Many people want better data in Canada. They think it can improve the quality of decision-making and, most importantly, outcomes. This group includes Don Drummond, who recently wrote a report on how to improve labour market information, and my colleague Tyler Meredith at the Institute for Research on Public Policy.

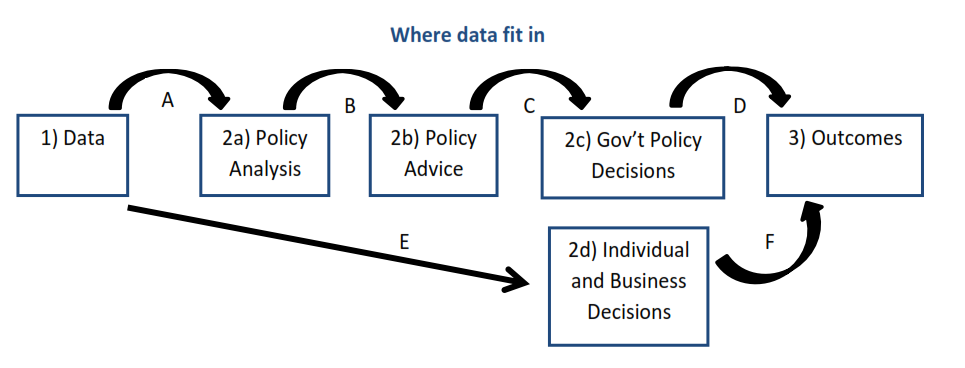

Here’s one way to think about the role of data (Stage 1 in this diagram) and how it might affect outcomes (Stage 3 at the end of the chain). Better data could improve the analysis, advice and decision-making by governments as well as businesses and individuals. Better decisions could then improve outcomes.

It looks like a straightforward process, but my diagram glosses over important issues, like what happens at the arrows in between the boxes. Which data improve analysis? What if more data add complexity and slow down analysis when timely decisions are needed?

And the arrows in the diagram only move in one direction. In reality, things occur simultaneously and there’s feedback from right to left. Maybe a decision-maker already knows what they want to do and then looks for evidence to justify their position. Perhaps the outcomes themselves, or technological change, or evolving business practices, mean that new data are needed to understand what’s happening.

A recent op-ed by Philip Cross (Macdonald-Laurier Institute) raises some related issues. He provocatively argues that we should break our data addiction and reject the belief that data, on their own, solve problems – we shouldn’t assume that data make the world more understandable, decisions less risky and the future less uncertain.

He writes that, “…society strives for evidence-based policy. But what is the evidence that more data always leads to better policy analysis?” This gets at arrow A in my diagram, but note two important distinctions.

In his article, Mr. Cross argues that more data doesn’t always lead to better analysis. That’s certainly true, but it refutes an argument no one made. Most data advocates want better data, not simply more, and they think it will generally, not always, improve our understanding of the world. We will make better decisions because of this, and we’ll be better off for it.

While Mr. Cross wants less data-dependence, Lucas Kawa (Business in Canada) wants more clarity on the nature of data-dependence in a different context when he writes, “we have… a central bank with a data-dependent stance failing to clearly indicate which data it’s looking at.”

Unfortunately, neither demand is realistic. The Bank of Canada employs hundreds of economists who collectively turn raw data into analysis and advice. They and other institutions analyze as much information as they can gather – from a variety of sources far beyond Statistics Canada – and they filter it through statistical models and many economists’ brains to generate their best policy analysis and advice.

Instead of this complex process, many central bank watchers would prefer to know with certainty that monetary policy will tighten if, and only if, inflation hits X per cent or the unemployment rate drops below Y per cent. But the world is too complex to reduce to one or two summary data indicators. Indeed, that’s why we need the work from the hundreds of analysts in the first place.

Both the Federal Reserve and the Bank of England recently tried giving markets a simple data rule for their policy decisions. It failed. The problem is that simple rules are too simple, and they come at the cost of removing discretion and judgment from decision-making. Such tacit knowledge is hard to communicate, but it’s where much of the value in this process is added.

The bottom line is that being data-dependent doesn’t mean responding to every wiggle in data. Nor does it mean basing our decisions solely on data or models and nothing else.

Yes, we need better data. But that’s only a start. We also need to ask precise and well-posed questions – of ourselves in our analysis and of our policy makers in their choices – particularly as “big data” increases the availability of non-conventional data sources. In addition, we need to bring new approaches to bear on data, and clearly explain the results to non-specialists.

After concerns about jobs estimates during the Ontario election, let’s hope that the lesson learned by our politicians is not to withhold economic analysis in future campaigns. Instead, let’s hope it causes them to raise their game by presenting more credible analyses.

At the same time, let’s be realistic about what better data can accomplish. This means acknowledging that data give us imprecise measurements of reality, but when used responsibly and creatively, they help us make better choices and hold governments to account for their policy decisions.

Stephen Tapp is a Research Director at the IRPP. Follow him on Twitter @stephen_tapp; e-mail him at: stapp@irpp.org.