Illustration by Meech Boakye

In health care, the promise of artificial intelligence is alluring: With the help of big data sets and algorithms, AI can aid difficult decisions, like triaging patients and determining diagnoses. And since AI leans on statistics rather than human interpretation, the idea is that it’s neutral – it treats everyone in a given data set equally.

But what if it doesn’t?

In October 2019, a study published in the prestigious journal Science showed that a widely used algorithm that predicts which patients will benefit from extra medical care dramatically underestimated the health needs of the sickest Black patients. The algorithm, sold by a health services company called Optum, embodied “significant racial bias,” the authors concluded, suggesting that tools used by health systems to manage the care of about 200 million Americans could incorporate similar biases.

The problem was fundamental: The commercial algorithm focused on costs, not illness. In looking at which patients would benefit from additional health care services, it underestimated the needs of Black patients because they had cost the system less. But Black patients’ costs weren’t lower because the patients were healthier; they were lower because they had unequal access to care. In reality, Black patients were considerably sicker than white patients who received the same risk score. Modifying this algorithm to address the racial disparity would have increased the percentage of Black patients flagged for additional help from 17.7 per cent to 46.5 per cent.
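The core of the problem, using spending as a stand-in for need, can be illustrated with a toy calculation. The sketch below is hypothetical and is not Optum’s algorithm: it assumes two groups with identical illness levels, where group B generates only 60 per cent of the spending of group A at the same level of illness because of unequal access to care. Ranking patients by cost then selects only group A, while ranking by illness selects both groups equally.

```python
from dataclasses import dataclass

# Hypothetical assumption: at the same level of illness, group "B" generates
# only 60 per cent of the spending of group "A" because of unequal access to care.
ACCESS = {"A": 1.0, "B": 0.6}


@dataclass
class Patient:
    group: str      # hypothetical group label, "A" or "B"
    illness: int    # true health need, e.g. number of active chronic conditions


def cost(p: Patient) -> float:
    """Observed spending: what a cost-based model actually learns to predict."""
    return p.illness * ACCESS[p.group]


def select_top(patients, key, k):
    """Return the k patients with the highest score under `key`."""
    return sorted(patients, key=key, reverse=True)[:k]


def share_of_group(selected, group):
    """Fraction of the selected patients who belong to `group`."""
    return sum(p.group == group for p in selected) / len(selected)


# Both groups have exactly the same spread of illness (1 to 10).
patients = [Patient(g, n) for g in ("A", "B") for n in range(1, 11)]

by_cost = select_top(patients, cost, k=4)
by_need = select_top(patients, lambda p: p.illness, k=4)

print(share_of_group(by_cost, "B"))  # 0.0: group B is never flagged
print(share_of_group(by_need, "B"))  # 0.5: group B gets half the spots
```

The toy numbers only show the direction of the effect: when the label a model predicts (cost) already carries the imprint of unequal access, an accurate prediction of that label reproduces the inequity.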

Ruha Benjamin, a professor of African American studies at Princeton University, frames the problem with the algorithm this way: Behind a veneer of technical neutrality, automated systems “hide, speed and deepen racial discrimination,” she wrote in an article for Science. Even those that explicitly aim to fix racial inequity can end up doing the opposite, she says. “Jim Crow practices feed the ‘New Jim Code.’”

As the world embraces artificial intelligence and machine learning – algorithms that learn to find patterns in data – there’s a risk that many of the tools created won’t treat all people equally, despite their developers’ best intentions. Academics such as Dr. Benjamin are raising these concerns as the United States, Britain, China, Canada and others are racing to dominate the field of AI and health.

In 2017, the Canadian government announced the $125-million Pan-Canadian AI Strategy, partnering with AI hubs in Montreal, Toronto and Edmonton. Toronto’s Hospital for Sick Children appointed its first chair in biomedical informatics and AI in 2019 and, in September, the University of Toronto’s faculty of medicine received a $250-million gift – the largest donation ever to a Canadian university – to fund new initiatives that include a centre for AI in health care.

Hospitals, health care providers and research labs are already using AI applications in a variety of ways: SickKids evaluates two trillion data points of archived intensive-care-unit records in its Goldenberg Lab, dedicated to AI research, to identify problems in patients’ vitals that suggest they’re likely to go into cardiac arrest within five minutes. MEDO.ai, a University of Alberta spinoff company, recently received U.S. Food and Drug Administration clearance to use AI to detect hip dysplasia. Surgical Safety Technologies, a start-up based in Toronto, created the OR Blackbox, which is deployed in Canadian, American and European hospitals, and monitors surgeries to provide insights on making procedures safer.

All of these applications draw on research that began more than 70 years ago, when Alan Turing, an English mathematician, published his pioneering 1950 paper, “Computing Machinery and Intelligence.” In it, he proposed the Turing Test to evaluate whether a computer was “thinking” – a notion foundational to AI.

For some, the idea of machines that can effectively teach themselves to solve problems is an idealistic, almost utopian, vision, conjuring a flawless future in which the most complex medical problems are predicted by automated systems. But medicine’s history leads others to worry that, instead of solving health care’s biggest problems, AI might make them worse by magnifying decision-making biases and inequities that already exist, acting as a distraction from deep-rooted injustices.

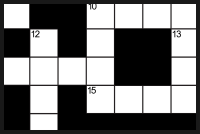

This 19th-century portable spirometer was used to see how much air a person could exhale. Such measurements became part of some dubious racial pseudoscience in North American medicine. Wellcome Collection/Science Museum, London

The spirometer

On March 25, 1999, the front page of the Baltimore Sun displayed an article about Owens Corning, a multinational insulation manufacturer that had gone to court to try to make it harder for African Americans to sue the company for exposing them to asbestos. The manufacturer’s lawyers cited medical evidence that claimed Black people had lower lung capacities than white people – and argued that, as a result, Black plaintiffs should have to show worse scores on lung tests than white plaintiffs in order to qualify for a trial.

Lundy Braun read a syndicated version of the article and thought, “What is this?” A professor of pathology and laboratory medicine at Brown University’s medical school, as well as a professor of African studies, she had begun talking with her students about racial disparities and about gaps in the curriculum.

Dr. Braun’s friend, an occupational health physician, explained to her that the idea of different races having different lung capacities was an accepted principle rooted in science. This standard practice dated back to 1844, when a physician-scientist named John Hutchinson presented an elegant apparatus in London that measured “vital capacity,” the maximum amount of air a person could expel from their lungs following a maximum inhalation. He called it the spirometer.

Southern physician, plantation owner and pro-slavery theorist Samuel Cartwright was the first to use the spirometer to compare Black and white lung function. He estimated that Black men’s lung capacities were 20 per cent lower than white men’s. (The U.S. statesman and former president Thomas Jefferson had explored the same idea earlier, in his 18th-century book Notes on the State of Virginia.) This “racial ideology,” Dr. Braun wrote in her book on the subject, Breathing Race into the Machine, “served to explain the subordination of Black people as the natural condition of the Black race.”

The idea gained traction at the end of the Civil War, when President Abraham Lincoln authorized a massive anthropometric study of the Union army. The study, which made use of a number of specialized instruments including the spirometer, concluded that Black lungs had 6 to 12 per cent less “vigour” than white lungs. But it didn’t acknowledge the difference in life conditions between Black and white soldiers, Dr. Braun wrote. The Black men studied had been enslaved on Southern plantations, had poor nutrition, received inferior medical care in segregated hospitals, and endured disproportionate mortality and higher infectious-disease rates – some of which would directly affect lung function.

More than a century later, “race correction” continues in the medical profession. In the United States, spirometers still apply a correction factor of 10 to 15 per cent when assessing a Black individual’s lung volume, though many pulmonologists may not be aware of it, because the correction is built into the spirometer’s technology. When the health provider enters the patient’s race, the results change automatically.
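To see how a built-in correction can change a clinical reading, consider a simplified sketch. It assumes, hypothetically, that the correction works by lowering the “expected” lung volume for a Black patient by 15 per cent, and that a result below 80 per cent of the expected value is flagged as abnormal. Real spirometry software uses population reference equations and its own cut-offs, so these numbers are illustrative only.

```python
# Hypothetical cut-off: results below this share of the expected value are flagged.
ABNORMAL_BELOW_PERCENT = 80.0


def percent_of_expected(measured_litres: float, expected_litres: float,
                        correction: float = 1.0) -> float:
    """Measured lung volume as a percentage of the expected ("predicted") value.

    A correction below 1.0 shrinks the expected value, as a race correction does,
    which makes the same measurement look closer to normal.
    """
    return 100.0 * measured_litres / (expected_litres * correction)


def is_flagged(percent: float) -> bool:
    """True if the result falls below the hypothetical abnormal cut-off."""
    return percent < ABNORMAL_BELOW_PERCENT


measured, expected = 3.0, 4.0   # illustrative values in litres

uncorrected = percent_of_expected(measured, expected)       # 75.0
corrected = percent_of_expected(measured, expected, 0.85)   # about 88.2

print(uncorrected, is_flagged(uncorrected))
print(round(corrected, 1), is_flagged(corrected))
```

The same 3.0-litre measurement is flagged without the correction and passes with it: the patient’s lungs have not changed, only the yardstick.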

In scientific literature, race correction is often explained by pointing to biologic and genetic differences between Black and white people. But according to the Institute for Healing and Justice in Medicine’s May, 2020, report: “The notions that racial and ethnic differences in lung capacity exist, and that these differences should be programmed into the diagnosis of lung disease, are fundamentally rooted in a history of racism.”

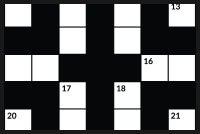

Medical students in Canada are still taught that racial disparities in lung capacity exist. In a March, 2020, lecture at the University of Toronto, first-year medical students were told that “African Americans have about 15 per cent smaller [lung volumes].” Behind the instructor, a chart was displayed: a line labelled Caucasian sat at the top of the slide, with lines for Northeast Asian, Southeast Asian and African American adjustments beneath it. Indigenous peoples were not included.

Race correction is incorporated into a tool used to measure kidney function, too, because “on average, Black persons have greater muscle mass than white persons,” according to a 1999 paper in the journal Annals of Internal Medicine. That paper established one of the equations used to estimate glomerular filtration rate (eGFR), often described as the best and most routinely used measure of kidney function.
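The race term in such equations is typically a single multiplier. The sketch below uses the widely cited four-variable MDRD study equation, a later simplified form of the 1999 equation, in the version intended for standardized creatinine assays; the constants should be verified against the published equation before any use. Whatever the other inputs, the race coefficient raises the estimate for a Black patient by about 21 per cent, which can make kidney function look healthier than it is.

```python
def egfr_mdrd(creatinine_mg_dl: float, age_years: float,
              female: bool, black: bool) -> float:
    """Estimated GFR (mL/min/1.73 m^2) from the four-variable MDRD study equation.

    Constants as commonly published for creatinine measured with standardized
    (IDMS-traceable) assays; verify against the primary source before use.
    """
    egfr = 175.0 * creatinine_mg_dl ** -1.154 * age_years ** -0.203
    if female:
        egfr *= 0.742
    if black:
        egfr *= 1.212   # the race coefficient at issue
    return egfr


# Same lab result, same age and sex: only the race input differs.
base = egfr_mdrd(1.4, 60, female=False, black=False)
with_race = egfr_mdrd(1.4, 60, female=False, black=True)
print(round(with_race / base, 3))  # 1.212
```

Because the multiplier is applied after everything else, two patients with identical blood tests can land on opposite sides of a treatment or transplant-listing threshold purely because of the race box checked.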

Over the past few years, at least nine U.S. medical schools have abolished the race-based estimation of GFR. Recently, 1,970 health care professionals and students, a number that is still growing, signed a national petition affirming the elimination of race from the assessment of kidney function and calling on the National Kidney Foundation and the American Society of Nephrology to stop investing in flawed science.

In Canada, race correction is still used in measuring kidney and lung function, but there is a lot of variation among medical practitioners. It’s not clear how many use it or how it’s used, says LLana James, AI, Medicine and Data Justice Postdoctoral Fellow at Queen’s University. “Race-medicine is not solely about Black people,” James says. “It is also about how white people have used themselves as a primary reference in clinical assessment, and have in so doing, not necessarily tended to the precision of the science.”

However, race-medicine is not limited to the kidneys and lungs. It touches almost every body system, James says. In August, the New England Journal of Medicine published a list of specialties that use race correction in clinical algorithms, which include but are not limited to: cardiology, nephrology, obstetrics, urology, endocrinology, pulmonology and oncology. “Given their potential to perpetuate or even amplify race-based health inequities, they merit thorough scrutiny,” the journal reads. “Many of these race-adjusted algorithms guide decisions in ways that may direct more attention or resources to white patients,” the authors write, “than to members of racial and ethnic minorities.”

A software engineer works at a Beijing lab this past March to design a facial recognition program that can identify people even when they wear masks to guard against COVID-19. Facial recognition algorithms are controversial because some have contained built-in biases that make it harder to identify people of certain races accurately. Thomas Peter/Reuters

AI invisibility

Misguided notions of racial difference often percolate inside the field of medicine, perpetuating health disparities. The risk of AI is that it preserves these ideas – and draws conclusions based on them – behind a cloak of invisibility.

Now, when Dr. Braun considers what her research on spirometry means in the context of AI and machine learning, she says, “I worry that we’re not examining the assumptions about race and racial difference that are built into the algorithms.”

Attempts to achieve neutrality in an AI model can sometimes be misguided: The developers who created Optum’s biased tool deliberately excluded markers of race. But their attempt to be neutral failed because race cannot be isolated from the conditions of racism.

Madeleine Clare Elish of the Data & Society Research Institute calls machine-learning tools “socio-technical systems.” The “beliefs, contexts, and power hierarchies” that shape the system’s development cannot be separated from the technology.

When racism, health and technology are treated as distant islands, oceans away from one another, the root of the inequities that lead to health disparities becomes obscured and easily misrepresented.

In 2018, a study published in the peer-reviewed cancer journal Annals of Oncology reported that a deep-learning convolutional neural network (CNN), a type of AI model loosely inspired by human vision, detected potentially cancerous skin lesions better than 58 dermatologists did.

However, the repository of public-access skin images the developers used to create their screening tool, one of the largest and most frequently used databases of its kind, collects most of its images from fair-skinned populations in the United States, Europe and Australia.

Dr. Adewole Adamson, a dermatologist in Texas, wrote a subsequent opinion piece in The Journal of the American Medical Association that read: “Although there is enthusiasm about the expectation that machine-learning technology could improve early detection rates, as it stands it is possible that the only populations to benefit are those with fair skin.”

At a recent virtual University Health Network lecture, Dr. Benjamin played a clip from the satirical sitcom Better Off Ted. In a corporate office, a Black employee is at work late one night when the lights go off. He walks up to the sensor and waves his hands to turn them back on, but the lights don’t respond. The following day, the elevator buttons don’t react to his touch. He leans over to drink from the motion-sensor water fountain, but it doesn’t turn on; later, a manual fountain is installed with a sign hanging over it that reads: “Manual drinking fountain (for Blacks).” He discovers that the office’s new “efficient” sensors can only see light skin.

“Indifference to social reality on the part of tech designers and adopters,” Dr. Benjamin said, “can be even more harmful than malicious intent.”

This isn’t science fiction. A few years ago, a Black man put his hand under a soap dispenser at a Marriott hotel in Atlanta. It didn’t respond. Seconds later, a white man put his hand under the same dispenser and immediately received a clump of soap. They repeated the test over and over, with the same result each time, and posted a video of the experiment online.

“Who is seen? Who is heard? And for whom are our digital and physical worlds being built?” Dr. Benjamin asks.

A caregiver holds the hand of an Alzheimer's patient. A University of Toronto researcher has found ways to remove age bias from Alzheimer's research, but doing the same for race is much more complicated. Ben Margot/The Associated Press

Exploring solutions

One technical approach to biased data is to compensate for it mathematically.

Frank Rudzicz, a faculty member at the Vector Institute for Artificial Intelligence in Toronto, recognizes that all data sets potentially contain biases. To demonstrate, the University of Toronto associate professor points to his research on Alzheimer’s disease.

As you get older, the chances of being diagnosed with Alzheimer’s increase. For this reason, age is a significant factor in the diagnosis. But if you focus only on age, there is a risk of overdiagnosing older people and underdiagnosing younger people.

To explore this problem, Dr. Rudzicz “normalized” the data, removing the influence of age, so the algorithm could find cases that would otherwise go unidentified. It’s as if a parent and a child sat at either end of a seesaw, and two more children joined the child on the lighter side. By moving toward the centre, the parent can balance the seesaw, effectively giving more weight to the children at the other end.
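One generic way to “remove” age from a measurement is to express each patient’s score relative to peers of a similar age. The sketch below illustrates that idea and is not Dr. Rudzicz’s actual method; the 10-year age bands and the input format of (age, score) pairs are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean, pstdev


def normalize_within_age_band(records, band_width=10):
    """Convert raw scores to z-scores computed among same-age-band peers.

    `records` is a list of (age, score) pairs. After normalizing, a score says
    how unusual a patient is for their age group, not how old they are.
    """
    bands = defaultdict(list)
    for age, score in records:
        bands[age // band_width].append(score)

    # Mean and (population) standard deviation of each age band.
    stats = {band: (mean(scores), pstdev(scores)) for band, scores in bands.items()}

    normalized = []
    for age, score in records:
        mu, sigma = stats[age // band_width]
        normalized.append(0.0 if sigma == 0 else (score - mu) / sigma)
    return normalized


# Hypothetical (age, symptom score) pairs: older patients score higher overall.
records = [(45, 2.0), (47, 4.0), (82, 6.0), (85, 8.0)]
print(normalize_within_age_band(records))  # [-1.0, 1.0, -1.0, 1.0]
```

After normalizing, the 47-year-old with a raw score of 4 stands out from age peers just as much as the 85-year-old with a raw score of 8, so a younger patient’s warning signs are no longer drowned out by age alone.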

However, with race, Dr. Rudzicz says, normalizing is much more complicated than correcting for age because “it could end up actually reinforcing bias or creating new ones. Or, even nothing to do with bias, but it creates some inequality.”

In some cases, though, an algorithm is a “black box”: it reaches such a high level of complexity that it finds patterns on its own. Data goes in, decisions come out, but the programmer has limited knowledge of what went on in between. In these cases, there’s a heightened risk that bias creeps in undetected.

Rich Caruana, a senior principal researcher at Microsoft, has encountered the black box and found ways to shine a light inside it.

In the mid-1990s, he designed several different algorithms to predict the likelihood of death for patients with pneumonia. One model outperformed all of the others, but it was too risky to use on patients because he didn’t know how it had made its predictions.

So he turned to a more interpretable model, which showed its work by producing a list of the decision-making “rules” the algorithm had learned. This time, one set of predictions raised a red flag: Patients who had asthma appeared less likely to die of pneumonia. But why would someone with a lung problem fare better with a respiratory illness?

The explanation lay in how those patients were treated: When asthma patients entered the hospital, they often received immediate care, were admitted to the ICU and were seen more frequently. That’s why they had lower mortality rates – not because they were less vulnerable to pneumonia, but the opposite: physicians knew they were high-risk.

“This asthma bias,” Dr. Caruana said in a lecture last year, “is very similar to race, gender and socioeconomic status bias.”

As a bioethicist at SickKids whose research focuses on AI, Melissa McCradden studies interpretability and explainability, fields that explore how to earn users’ trust, for example by creating a secondary algorithm that explains how a model arrived at a given prediction.

“If you are affluent and in an urban area and have a good job, you are able to go to health care whenever you want. You never miss a follow-up appointment,” Dr. McCradden says. The result: better quality data.

Those who don’t have a family doctor, or the time to take off work and travel for hours to wait at the hospital, and those who have experienced discrimination in health care, have fragmented records.

Scholars such as Yeshimabeit Milner, founder and executive director of Data for Black Lives, have drawn links between big data and colonialism. The social determinants that shape health inequities – such as access to housing, education, economic stability and community – never address the “power of colonization,” says James.

Dr. McCradden points out that the “affluent white male” is often considered the standard for most medical conditions. “The rest of us are deviations.”

Aside from the highly technical, difficult-to-understand ways to make AI more equitable, Dr. McCradden has another approach. When developers are conceptualizing and building AI models to solve health care problems, people who have dedicated their life’s work to studying racial and social inequalities and advocating against them should have a seat at the table, before the models are deployed and potentially propagate bias.

“I think we really underestimate the extent to which these social inequities and structural inequities actually pervade the data that’s being used to generate these machine-learning models,” Dr. McCradden says.


Racial data gaps can be bad for your health

Canada lags far behind other countries in tracking how ethnicity affects health care, the labour market and the justice system, The Globe and Mail found in a 2019 investigation. What are policy-makers missing?

Data collection

Devin Singh, a staff physician in pediatric emergency medicine at SickKids and one of Canada’s first physicians to specialize in clinical AI and machine learning, says that disparities discovered in the research phases of AI models present an opportunity “for machine learning to actually both uncover issues of systemic racism in society and also simultaneously address them.”

In his own research, he is creating an AI model that can predict the tests patients will need, such as an ultrasound or X-ray, while they are waiting in the emergency department to see a health care provider. His first step is to ask: Is my machine-learning model performing in an equitable way across race, ethnicity, language, gender, age, income status and socioeconomic status?

If you don’t ask this primary question, “you won’t know,” Dr. Singh says. “You would actually be exacerbating racial inequality and propagating it forward, if it existed in the data.”

To determine whether the model is performing in an equitable way, he has to gather data on race and ethnicity to assess bias, the phase his SickKids study is now in. Once he has this data, his model will need to be audited to uncover the systemic barriers in the way health systems are designed. Ziad Obermeyer, one of the authors who dissected Optum’s algorithm, has been creating a playbook that outlines how health systems can audit their own algorithms for bias, as he did.
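The first check Dr. Singh describes, whether a model performs equally well for every group, can be sketched as a comparison of error rates. In this minimal example the group labels, the toy predictions and the five-percentage-point tolerance are all hypothetical; a real audit would look at several metrics (missed cases as well as false alarms) and at the uncertainty that comes with small groups.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Share of wrong predictions within each group."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must have the same length")
    totals, errors = defaultdict(int), defaultdict(int)
    for actual, predicted, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        errors[group] += int(actual != predicted)
    return {group: errors[group] / totals[group] for group in totals}


def groups_needing_review(rates, tolerance=0.05):
    """Groups whose error rate exceeds the best-served group's by more than `tolerance`."""
    best = min(rates.values())
    return sorted(g for g, r in rates.items() if r - best > tolerance)


# Toy data: did the patient need an ultrasound (1) or not (0)?
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
groups = ["x", "x", "x", "x", "y", "y", "y", "y"]   # hypothetical group labels

rates = error_rate_by_group(y_true, y_pred, groups)
print(rates)                         # {'x': 0.0, 'y': 0.5}
print(groups_needing_review(rates))  # ['y']
```

A gap like this flags a group for closer investigation before deployment; it does not by itself explain why the model errs, which is where the audit of how care was actually delivered comes in.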

Taking a step away from data and into the emergency department, Dr. Singh may discover that many patients who don’t speak English as their first language had to request an interpreter to communicate with their doctor. Waiting for and speaking through an interpreter may introduce a new variable, one that could affect the care those patients receive and lead to bias in the data. The crucial next phase is ensuring the model hasn’t learned this bias.

But some barriers are already well known. Research has shown, time and again, that systemic racism within the Canadian health care system is a significant contributor to the health outcomes of Indigenous and racialized peoples. Structural racism, which dictates access to health care, along with racial discrimination, cultural oppression and the history of colonization, continues to result in health disparities.

In her research, James has closely studied the collection of race and ethnicity data in Canada and found that there is often a misguided perception that by collecting more and more race and ethnicity data, you can measure racism. “But race is not a proxy for racism,” says James. “Really, what we want to examine is if there is equal access. Is racism acting as a barrier?”

In October, Dr. Singh hosted a virtual University of Toronto medical school lecture for second-year students on clinical AI and the future of pediatric practice. He presented a bar graph in which one bar towered over the others, representing a hypothetical scenario in which the model was making far more mistakes for one particular group of patients.

Then he asked the class a question: “What if this error bar actually represents race?”

If it represents a particular racial group, and if no bias assessment is conducted before the model is deployed across Canada, “we will systematically … propagate systemic bias in our health care moving forward,” he said. “It’s terrible.”

From their homes, over Zoom, the class of future physicians silently listened. “We know that bias is existing in our health care systems and so by identifying it with the machine-learning model, we can actually counteract that,” says Dr. Singh, “undoing the biases that exist systemically.”

Dr. Benjamin presents a proposition, too. “Given that inequity and injustice are woven into the very fabric of our society … that means that each twist, coil and code is a chance for us to weave new patterns, practices and politics.”


Hannah Alberga
